Tesla Autopilot Error: Train Tracks Seen As Road By System

Introduction: The Incident in Woodland

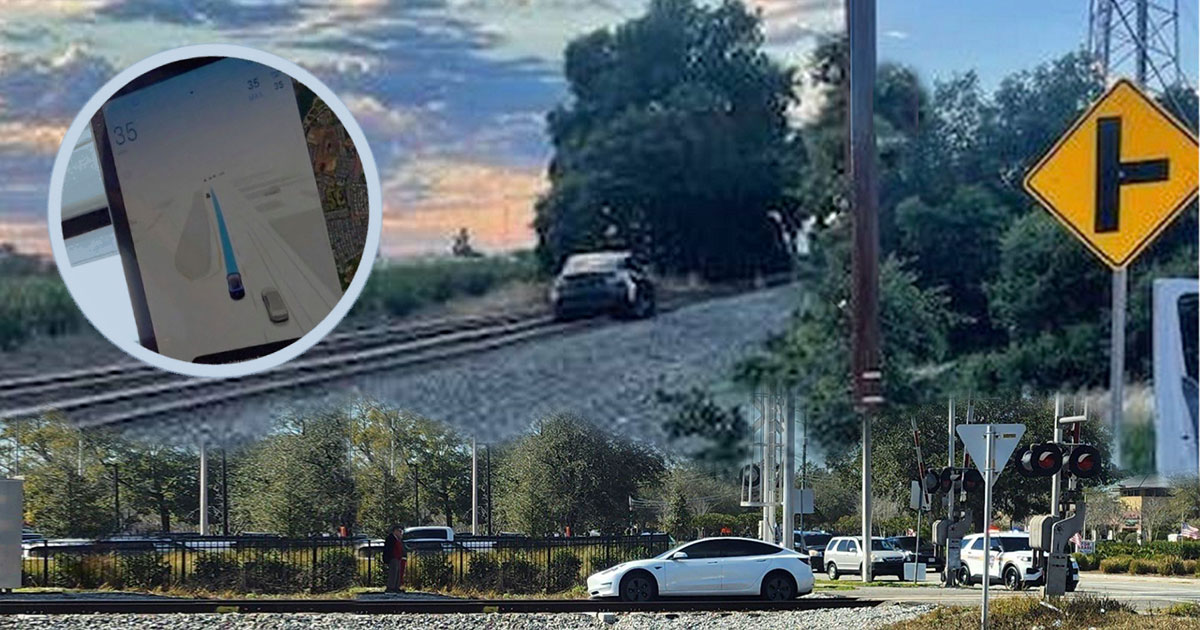

Woodland, California, a small city just 15 miles outside of Sacramento, witnessed a troubling incident that serves as a stark reminder of the limitations of Tesla’s Autopilot feature. The Woodland Police Department issued a warning to Tesla drivers to remain vigilant while using the Autopilot system, following an incident where the vehicle’s advanced driver assistance system mistook train tracks for a road.

A Close Call with Danger

On an otherwise ordinary day, a Tesla driver in Woodland found themselves in a dangerous situation. While the car was cruising with Autopilot engaged, it began to veer toward a set of train tracks, misidentifying them as a road. The driver, perhaps lulled into a false sense of security by the technology, didn’t immediately notice the error. The episode could have ended in a catastrophic accident had the driver not eventually taken control.

The Realities of Tesla’s Autopilot System

Tesla’s Autopilot is marketed as an advanced driver assistance system that promises to make driving easier and safer. However, incidents like the one in Woodland highlight that it is far from infallible. According to reports, there have been over 200 crashes and 29 deaths involving Tesla’s Autopilot. These numbers are sobering reminders that despite the impressive technology, human oversight remains crucial.

A History of Missteps

This isn’t the first time Tesla’s Autopilot has found itself in a precarious situation. Two months ago in Ohio, a Tesla using the supposedly more advanced Full Self-Driving (FSD) system had a close encounter with a speeding train. The driver managed to regain control just in time but still crashed into the railroad crossing arm. Alarmingly, this wasn’t the driver’s first brush with danger; he had narrowly avoided a similar incident earlier in the year.

The Technology Behind Tesla’s Autopilot

Tesla’s Autopilot and Full Self-Driving systems are at the forefront of autonomous vehicle technology. These systems perceive the environment through a suite of cameras; earlier vehicles also used radar and ultrasonic sensors, but Tesla has since shifted to a camera-based approach it calls Tesla Vision and removed those sensors from many newer models. Machine learning algorithms process this sensor data to make driving decisions. In theory, this should create a seamless and safe driving experience. In practice, real-world use reveals gaps and challenges that still need to be addressed.

The Perception Problem

One of the key issues in the Woodland incident was the vehicle’s perception system. Autopilot mistook the train tracks for a road, an error that points to a significant flaw in the system’s object recognition capabilities. While Tesla’s sensors and algorithms are designed to detect and classify various elements of the road environment, they are not yet perfect. Conditions such as lighting, weather, and even the nature of the surroundings can impact the system’s accuracy.

Overreliance on Technology

A growing concern among experts is the tendency of drivers to over-rely on these autonomous systems. Tesla’s marketing often emphasizes the advanced capabilities of Autopilot and Full Self-Driving, which might lead some drivers to believe that the system can handle more than it actually can. This overreliance can reduce driver vigilance, increasing the risk of accidents when the technology inevitably encounters a situation it cannot handle correctly.

The Human Factor

The incidents in Woodland and Ohio underscore the indispensable role of human drivers. No matter how advanced the technology, human intervention is often required to prevent accidents. Drivers must remain attentive and ready to take control at a moment’s notice. The idea of a fully autonomous car is enticing, but the current reality is that we are not there yet.

The Regulatory Landscape

Regulatory bodies are increasingly scrutinizing autonomous driving technologies. In the United States, the National Highway Traffic Safety Administration (NHTSA) has been investigating several crashes involving Tesla’s Autopilot. The outcomes of these investigations could lead to stricter regulations and more robust safety standards for autonomous vehicles.

Potential Legal Implications

The legal ramifications of accidents involving autonomous driving systems are complex. Questions about liability—whether the driver or the manufacturer is at fault—are still being debated in courts. These legal challenges will shape the future of autonomous driving regulations and influence how manufacturers design and market their systems.

The Road Ahead for Tesla

Tesla continues to push the envelope with its autonomous driving technology. CEO Elon Musk frequently touts the potential of Autopilot and Full Self-Driving, promising future updates that will improve safety and reliability. However, incidents like the one in Woodland highlight that there is still a long way to go.

Improving Sensor Technology

One of the paths forward is improving sensor technology. More accurate and robust sensors could help reduce the likelihood of errors like mistaking train tracks for roads. Lidar, which uses laser pulses to create detailed 3D maps of the environment, is one such technology that could enhance Tesla’s systems, although Musk has been famously critical of it.

Enhanced Machine Learning Algorithms

Another avenue for improvement lies in the machine learning algorithms that power Tesla’s autonomous systems. By feeding the system more diverse and comprehensive data, engineers can train the algorithms to better recognize and respond to a wider range of scenarios. This continuous learning process is essential for developing truly reliable autonomous vehicles.

The Role of Driver Education

Educating drivers about the limitations and proper use of Autopilot and Full Self-Driving is crucial. Tesla and other manufacturers need to ensure that users understand that these systems are not foolproof and require constant vigilance. Clear communication about the capabilities and limitations of autonomous driving technology can help prevent accidents.

Conclusion: Balancing Innovation and Safety

The incident in Woodland serves as a crucial reminder that while autonomous driving technology is advancing rapidly, it is not yet infallible. Drivers must remain engaged and vigilant, ready to take control when necessary. Tesla’s vision of a future with fully autonomous vehicles is ambitious, but the journey requires balancing innovation with safety.

As we look to the future, it is essential for manufacturers, regulators, and drivers to work together. By improving technology, enacting thoughtful regulations, and maintaining driver awareness, we can pave the way for a safer and more reliable autonomous driving experience. Until then, the incidents in Woodland and Ohio remind us that we are still in the early stages of this technological revolution, and caution must be our co-pilot.